We hear this a lot in conversations about scaling. In this section we’re going to literally “just add another pod” and see what happens with our WordPress application in the Kubernetes cluster.

Note: all code samples from this section are available on GitHub.

Preparing MariaDB

After the previous sections you should be left with a clean default namespace, with no pods or services. OpenEBS should be installed and running with a working DiskPool and the Storage Classes for replicated and Hostpath volumes.

$ kubectl -n openebs get diskpool
NAME      NODE   STATE     POOL_STATUS   CAPACITY      USED   AVAILABLE
pool-k0   k0     Created   Online        10724835328   0      10724835328
pool-k1   k1     Created   Online        10724835328   0      10724835328
pool-k2   k2     Created   Online        10724835328   0      10724835328

$ kubectl get sc
NAME                     PROVISIONER               RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
mayastor-etcd-localpv    openebs.io/local          Delete          WaitForFirstConsumer   false                  5d1h
mayastor-loki-localpv    openebs.io/local          Delete          WaitForFirstConsumer   false                  5d1h
openebs-hostpath         openebs.io/local          Delete          WaitForFirstConsumer   false                  5d1h
openebs-replicated       io.openebs.csi-mayastor   Delete          Immediate              true                   4d23h
openebs-single-replica   io.openebs.csi-mayastor   Delete          Immediate              true                   5d1h

We’re going to focus on “just adding another pod” to our WordPress application in this section, so let’s first get a working MariaDB service with a single pod and a replicated persistent volume.

We’re going to introduce a new concept here: a volumeClaimTemplates attribute in our StatefulSet spec. This attribute dynamically creates persistent volume claims for pods managed by our StatefulSet. This allows us to ditch the previously used volume-claims.yml manifest altogether, and have our mariadb.statefulset.yml file define its volume:

  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      accessModes:
      - ReadWriteOnce
      storageClassName: openebs-replicated
      resources:
        requests:
          storage: 1Gi
  persistentVolumeClaimRetentionPolicy:
    whenDeleted: Delete
    whenScaled: Delete

This is similar to a regular persistent volume claim. We have a retention policy here as well, set to delete the volume claim when the StatefulSet is deleted or scaled down. Both settings default to Retain to prevent any unintentional data loss, but Delete is more convenient while you’re just testing.

Also note that we’ve changed the name to data here. The final PVC name is built from this name, followed by the name of the StatefulSet and the pod ordinal, resulting in claims named data-mariadb-0. This also requires a slight change in how we mount the volumes: the volumes section in the template spec is gone, and the volumeMounts section in the container definition can use the “locally” defined claim name:

    spec:
      containers:
      - name: mariadb
        image: mariadb:10.11
        ports:
        - containerPort: 3306
        volumeMounts:
        - name: data
          mountPath: /var/lib/mysql

Let’s apply the MariaDB manifests and observe the cluster:

$ kubectl apply \
  -f mariadb.service.yml \
  -f mariadb.configmap.yml \
  -f mariadb.statefulset.yml
service/mariadb created
configmap/mariadb unchanged
statefulset.apps/mariadb created

$ kubectl get pods
NAME        READY   STATUS    RESTARTS   AGE
mariadb-0   1/1     Running   0          29s

$ kubectl get pvc
NAME             STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS         VOLUMEATTRIBUTESCLASS   AGE
data-mariadb-0   Bound    pvc-b424e36a-8ecd-46ec-97d8-255cd0ab62b4   1Gi        RWO            openebs-replicated   <unset>                 35s
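The claim name in the output above follows the pattern a StatefulSet uses for claims created from volumeClaimTemplates: the template name, then the StatefulSet name, then the pod ordinal. Here is a small sketch of that naming rule. Note that pvc_name is a hypothetical helper written for illustration, not something kubectl provides:

```shell
#!/bin/sh
# pvc_name is a hypothetical helper (not a kubectl feature). It builds the
# name of a claim created from a StatefulSet's volumeClaimTemplates:
#   <template name>-<StatefulSet name>-<pod ordinal>
pvc_name() {
  template="$1"
  statefulset="$2"
  ordinal="$3"
  printf '%s-%s-%s\n' "$template" "$statefulset" "$ordinal"
}

# Claim names we would expect if the mariadb StatefulSet had three replicas
for ordinal in 0 1 2; do
  pvc_name data mariadb "$ordinal"
done
```

Knowing the pattern up front makes it easier to match claims to pods when reading kubectl get pvc output later on.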

Let’s move on to our WordPress service.

Preparing WordPress

Just like with a MySQL or MariaDB service, a WordPress application will have some state: the WordPress core files, any plugins or themes we install, any media that we upload.

These are stored on the filesystem, and we’ll want to retain them in case of a pod crash or restart. We want to be able to run our pods anywhere in the cluster, so just like with MariaDB, we’ll use a replicated persistent volume for every WordPress pod.

For simplicity, let’s also disregard our previous Nginx deployment and service for now and switch back to using the Apache-based WordPress container image. This means our WordPress service will be the one exposed through a NodePort mapped to port 80 (rather than a FastCGI service on port 9000).

Let’s update our WordPress StatefulSet in wordpress.statefulset.yml to reflect all that:

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: wordpress
spec:
  selector:
    matchLabels:
      app: wordpress
  template:
    metadata:
      labels:
        app: wordpress

Nothing new here so far.

    spec:
      containers:
      - name: wordpress
        image: wordpress:6.5
        ports:
        - containerPort: 80
        volumeMounts:
        - name: data
          mountPath: /var/www/html

We changed the image here to the Apache-based container image on Docker Hub. We also updated the volumeMounts name to reference a volume named data, which we’ll use in our claim templates.

        env:
        - name: WORDPRESS_DB_HOST
          value: mariadb
        - name: WORDPRESS_DB_USER
          valueFrom:
            configMapKeyRef:
              name: mariadb
              key: username
        - name: WORDPRESS_DB_NAME
          valueFrom:
            configMapKeyRef:
              name: mariadb
              key: database
        - name: WORDPRESS_DB_PASSWORD
          valueFrom:
            configMapKeyRef:
              name: mariadb
              key: password

This section didn’t change much: we’re still reading values from our ConfigMap. Do note that we removed the volumes section, since claims are now going to be defined directly in the StatefulSet:

  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      accessModes:
      - ReadWriteOnce
      storageClassName: openebs-replicated
      resources:
        requests:
          storage: 1Gi
  persistentVolumeClaimRetentionPolicy:
    whenDeleted: Delete
    whenScaled: Delete

Similar to the MariaDB specification, this is a single volume claim template named data. The name will be combined with our StatefulSet name and the pod ordinal to form claims named data-wordpress-0, etc.

Our wordpress.service.yml file will also need a slight update for the new port:

apiVersion: v1
kind: Service
metadata:
  name: wordpress
spec:
  type: NodePort
  ports:
  - port: 80
    nodePort: 30007
  selector:
    app: wordpress

Let’s create the Service and StatefulSet with kubectl and observe the running pods:

$ kubectl apply \
  -f wordpress.service.yml \
  -f wordpress.statefulset.yml
service/wordpress created
statefulset.apps/wordpress created

$ kubectl get pods
NAME          READY   STATUS    RESTARTS   AGE
mariadb-0     1/1     Running   0          17m
wordpress-0   1/1     Running   0          39s

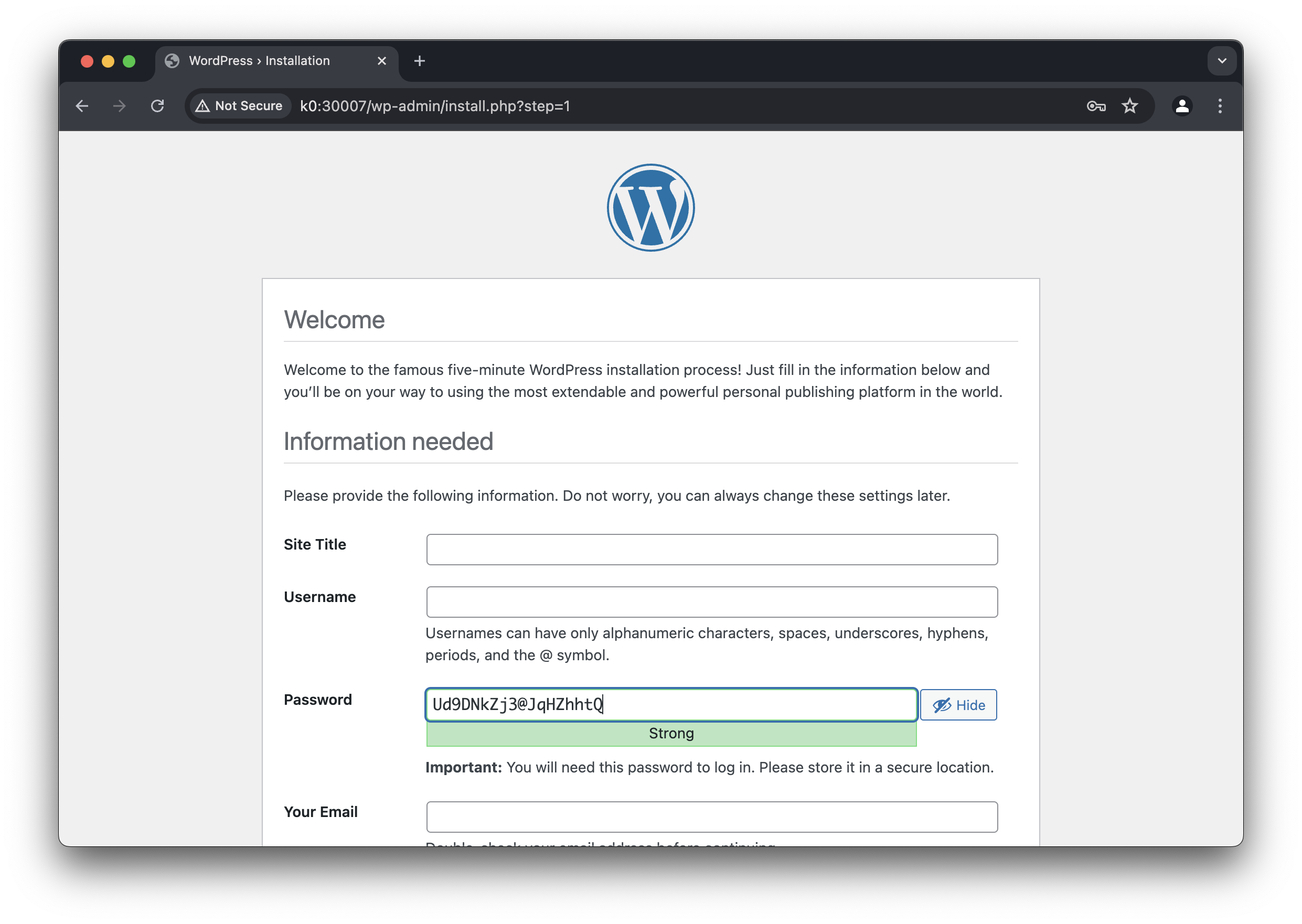

So far so good, we have a single-pod MariaDB database, and a single-pod WordPress application, which we can reach on any of our Node’s IP addresses or hostname on the port 30007:

Run through the installer and make sure you can log in to wp-admin. Now comes the fun part.

Scaling WordPress

Scaling things up and down is incredibly easy in Kubernetes. Watch!

$ kubectl scale statefulset/wordpress --replicas=2
statefulset.apps/wordpress scaled

$ kubectl get pods
NAME          READY   STATUS    RESTARTS   AGE
mariadb-0     1/1     Running   0          24m
wordpress-0   1/1     Running   0          7m59s
wordpress-1   1/1     Running   0          32s

There we have it! You’ve “successfully” scaled your WordPress application to two pods in Kubernetes. Don’t get too excited just yet though!

If you try to visit the site now, you’ll find that it almost works. However, if you log in, you’ll find yourself logged out after a couple of refreshes. Why is that?

We won’t be diving too deep into how authentication in WordPress works, but it’s worth knowing that the “logged in” state is shared between the MariaDB database and some cookies in your browser. Some of this data also happens to be hashed and salted.

These salts and some authentication related keys are usually defined in the wp-config.php file, but since we haven’t defined any, the WordPress container will generate them in the entrypoint script. This is good for security, but not good in our case, since the two pods are now operating with different sets of keys and salts.
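To see why mismatched keys log us out, here is a deliberately simplified sketch. It is not WordPress’s actual cookie scheme: the sign helper, the key values and the payload are all made up for illustration, and sha1sum from GNU coreutils is assumed. It only shows that a signature produced with one pod’s key cannot be reproduced with another pod’s key:

```shell
#!/bin/sh
# Simplified stand-in for cookie signing: hash the key together with the
# cookie payload. WordPress's real scheme is more involved; this only shows
# that a signature made with one key does not verify under another key.
sign() {
  key="$1"
  payload="$2"
  printf '%s|%s' "$key" "$payload" | sha1sum | cut -d' ' -f1
}

# Hypothetical keys, standing in for what each pod generated on startup
pod0_key="key-generated-by-wordpress-0"
pod1_key="key-generated-by-wordpress-1"
payload="admin|1735689600"

# The login request was served by wordpress-0, which signed the cookie
cookie_signature=$(sign "$pod0_key" "$payload")

# wordpress-0 recomputes the same signature and accepts the cookie
if [ "$(sign "$pod0_key" "$payload")" = "$cookie_signature" ]; then
  echo "wordpress-0: cookie accepted"
fi

# wordpress-1 uses a different key, so its signature does not match
if [ "$(sign "$pod1_key" "$payload")" != "$cookie_signature" ]; then
  echo "wordpress-1: cookie rejected"
fi
```

Whenever the Service routes a request to the pod that did not issue the cookie, the check fails and we’re sent back to the login screen.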

Luckily for us, we can override these using environment variables passed to the container. Let’s create a new wordpress.secrets.yml file and define a new Secret for our Kubernetes cluster. Secrets are very similar to ConfigMaps but are treated slightly differently:

apiVersion: v1
kind: Secret
metadata:
  name: wordpress-secrets
stringData:
  WORDPRESS_AUTH_KEY: 7052c0863ea6269f44f0de17dc5c5879fdc02fd4
  WORDPRESS_SECURE_AUTH_KEY: e769128cb8376581e309a002466b1346910e8bd3
  WORDPRESS_LOGGED_IN_KEY: 9d3a12e80b9aa24c5a045f1f092e6ffb93f9823a
  WORDPRESS_NONCE_KEY: 2b4d5a460c2dde65380a47188b4b5ebbe723ab18
  WORDPRESS_AUTH_SALT: ba846d9aeefe6c706e5c93a750c1e4cd601e4408
  WORDPRESS_SECURE_AUTH_SALT: 667895c8d69089620e44a7f1a97b1ac3efc6e386
  WORDPRESS_LOGGED_IN_SALT: fcf21a031d5a54eab2c4749257829b0d2830ea7f
  WORDPRESS_NONCE_SALT: 6f3a1db3c6bd211d404e071a0ad1801c53e117dd

These are just a few random hashes we generated:

$ head -n100 /dev/urandom | sha1sum
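If you’d rather not copy and paste eight hashes by hand, the same command can be wrapped in a loop. This is just a sketch: random_hash and generate_string_data are hypothetical helper names, and sha1sum from GNU coreutils is assumed. The values are quoted so that YAML always reads them as strings:

```shell
#!/bin/sh
# random_hash reuses the head | sha1sum command from above; cut drops the
# trailing " -" that sha1sum prints after the hash.
random_hash() {
  head -n100 /dev/urandom | sha1sum | cut -d' ' -f1
}

# generate_string_data prints one indented stringData entry per key and
# salt, each with its own freshly generated value.
generate_string_data() {
  for name in AUTH_KEY SECURE_AUTH_KEY LOGGED_IN_KEY NONCE_KEY \
              AUTH_SALT SECURE_AUTH_SALT LOGGED_IN_SALT NONCE_SALT; do
    printf '  WORDPRESS_%s: "%s"\n' "$name" "$(random_hash)"
  done
}

generate_string_data
```

The output can be pasted under the stringData key of the Secret manifest. Generate the values once and keep them, since changing them later invalidates existing login sessions.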

Note that Kubernetes Secrets expect base64-encoded values in the data attribute. Since we’re providing plain strings, we use the stringData attribute instead, and Kubernetes encodes them for us.
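For comparison, here is a quick sketch of what the same value would look like if we were to put it in the data attribute ourselves. It assumes the base64 utility from GNU coreutils, and the variable names are only for illustration:

```shell
#!/bin/sh
# One of the values from our Secret, as written under stringData
plain="7052c0863ea6269f44f0de17dc5c5879fdc02fd4"

# printf avoids the trailing newline that echo would add to the encoded value
encoded=$(printf '%s' "$plain" | base64)

# Decoding gives back the original string
decoded=$(printf '%s' "$encoded" | base64 -d)

echo "stringData value: $plain"
echo "data value:       $encoded"
echo "decoded again:    $decoded"
```

Keep in mind that base64 is an encoding, not encryption: anyone who can read the Secret can decode its values.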

Let’s also update our wordpress.statefulset.yml manifest to include these new keys in our environment variables, passed to the WordPress container:

    spec:
      containers:
      - name: wordpress
        image: wordpress:6.5-apache
        ports:
        - containerPort: 80
        volumeMounts:
        - name: data
          mountPath: /var/www/html
        envFrom:
        - secretRef:
            name: wordpress-secrets
        env:
        - name: WORDPRESS_DB_HOST
          value: mariadb

The secretRef attribute is a very convenient way of adding sensitive data to a container’s environment variables. Similarly, you can use configMapRef to add keys from a ConfigMap. We’ll leave that up to you for now.

Let’s add our Secret and updated StatefulSet to Kubernetes:

$ kubectl apply \
  -f wordpress.secrets.yml \
  -f wordpress.statefulset.yml
secret/wordpress-secrets created
statefulset.apps/wordpress configured

Our WordPress pods will be recreated after applying these manifests, and we’ll finally be able to log in to the wp-admin Dashboard once and have the session work across all pods, since they are now all running with the same set of salts and keys.

Problem solved? Well not quite…

The Filesystem

Surely clicking around the admin, adding posts, pages, users and whatnot, will all work like a charm. This is because all that data is stored in the database, which is shared between our two pods. The filesystem, however, is a different story.

We have two pods, each with its own individual PersistentVolumeClaim and PersistentVolume:

$ kubectl get pvc
NAME               STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS         VOLUMEATTRIBUTESCLASS   AGE
data-mariadb-0     Bound    pvc-b424e36a-8ecd-46ec-97d8-255cd0ab62b4   1Gi        RWO            openebs-replicated   <unset>                 145m
data-wordpress-0   Bound    pvc-8322e7ff-0cb1-4fc2-a696-806514709128   1Gi        RWO            openebs-replicated   <unset>                 129m
data-wordpress-1   Bound    pvc-9359cb0d-2e17-488f-8e99-280b97b315a3   1Gi        RWO            openebs-replicated   <unset>                 121m

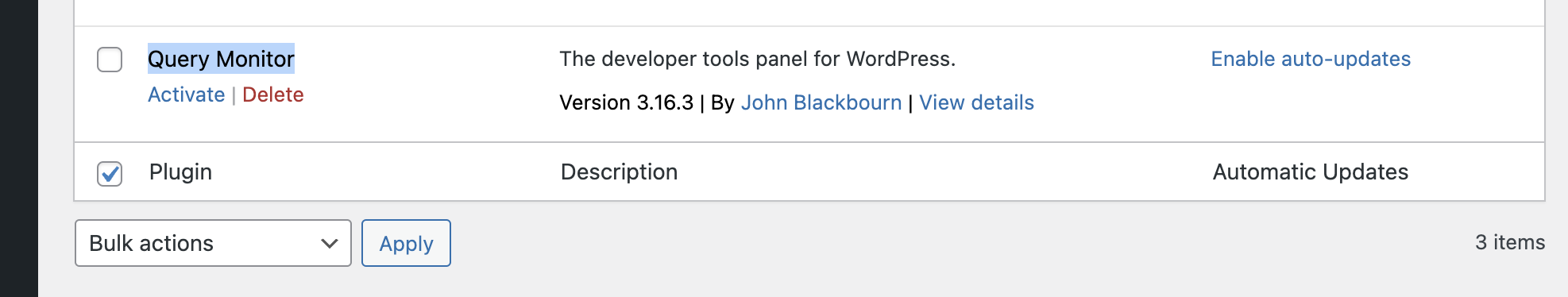

What will happen if we add a new plugin to our WordPress install? Let’s try that out. Navigate to Plugins – Add New Plugin. Find one called Query Monitor (quite a useful one!) and install it, but don’t activate it just yet!

After a successful installation, browse to the plugins list, and hit the Refresh button in your browser a few times. You’ll see that it’s inconsistent – on some requests, the new Query Monitor plugin will be in the list, but on some requests it won’t be there.

What’s going on?

Plugins (and themes) in WordPress consist of one or more files in the wp-content/plugins (or themes) directory, so when you install a new one in the Dashboard, WordPress will download an archive and unpack it there. The problem, though, is that this happens on just one lucky pod.

Let’s inspect the filesystem on each of the running pods to find out more:

$ kubectl exec -it wordpress-0 -- ls /var/www/html/wp-content/plugins
akismet  hello.php  index.php  query-monitor

$ kubectl exec -it wordpress-1 -- ls /var/www/html/wp-content/plugins
akismet  hello.php  index.php

Okay, so in our case the query-monitor plugin exists on the wordpress-0 pod but is missing on the wordpress-1 pod. This is why the plugin is not there when a request hits our second pod (remember, our Service load balances between the two pods).
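With only a handful of plugins the difference is easy to spot by eye, but on a larger install it helps to compare the listings mechanically. Here is a minimal sketch using the two listings from above. Note that missing_on is a hypothetical helper, not a kubectl or WordPress feature, and it assumes entries without spaces in their names:

```shell
#!/bin/sh
# missing_on prints the entries that appear in the first listing but not in
# the second. Listings are whitespace-separated names, as printed by ls.
missing_on() {
  first=$(mktemp)
  second=$(mktemp)
  # $1 and $2 are left unquoted on purpose so each name lands on its own line
  printf '%s\n' $1 | sort > "$first"
  printf '%s\n' $2 | sort > "$second"
  comm -23 "$first" "$second"
  rm -f "$first" "$second"
}

# Listings copied from the kubectl exec output above. Against a live cluster
# they could be captured with, for example:
#   pod0=$(kubectl exec wordpress-0 -- ls /var/www/html/wp-content/plugins)
pod0="akismet hello.php index.php query-monitor"
pod1="akismet hello.php index.php"

echo "On wordpress-0 but missing on wordpress-1:"
missing_on "$pod0" "$pod1"
```

The same comparison works for the themes and uploads directories, which suffer from exactly the same problem.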

You can try activating the plugin and you might be lucky to get it working for one or two requests, but the moment you hit the pod where the plugin files do not exist, WordPress will automatically deactivate the plugin (assuming you’re still on the plugins list page).

We can certainly try and fix this problem by hand – i.e. by copying the plugin files from one pod to another – and that will work, right until we add a third pod and then a fourth pod. The same is true for themes, and even media uploads!

Going Stateless

State is what usually breaks horizontal scaling in web applications, and many modern apps are stateless by design, relying on databases, cache servers, shared filesystems or other remote storage services for state.

WordPress however is over twenty years old and very, very stateful: plugins, themes, media, translations, upgrades, caches and more! For all this to work, we’ll need to patch a few things up, and there will be a couple of tradeoffs along the way.

In this section, we’ve successfully just added another pod to our Kubernetes cluster, and it also worked to some extent. In the next section we’ll be looking at some options to remove state from a WordPress application to make sure we can scale out.

Feel free to remove all the services, stateful sets, secrets and config maps along with any persistent volumes and claims:

$ kubectl delete -f .
configmap "mariadb" deleted
service "mariadb" deleted
statefulset.apps "mariadb" deleted
secret "wordpress-secrets" deleted
service "wordpress" deleted
statefulset.apps "wordpress" deleted

Head over to the next section when ready!